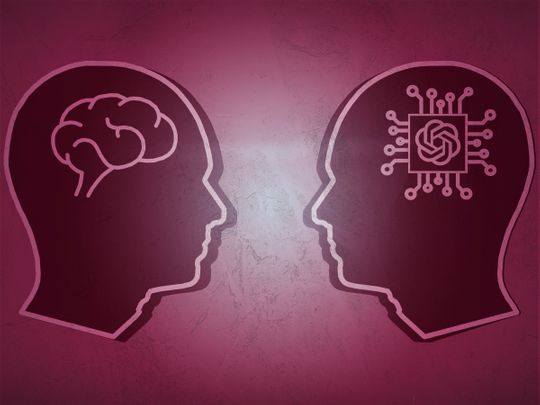

In an age when technology permeates every aspect of our lives, artificial intelligence (AI) has emerged as a powerful force with the potential to revolutionise how we interact, learn, and even feel. However, a recent tragedy involving a 14-year-old American boy, Sewell Setzer III, highlights the urgent need for a careful and considered approach to AI development and deployment.

As outlined in a wrongful-death lawsuit, Sewell reportedly engaged in conversations with a chatbot, which ultimately failed to provide the support he desperately needed and may have contributed to his tragic decision to take his own life.

Sewell’s case is a sobering reminder of the double-edged nature of AI. On the one hand, AI technologies, including chatbots, can offer companionship and assistance in ways previously unimaginable. They can provide information, help users navigate complex tasks, and even hold conversations that mimic human interaction. On the other hand, when these technologies fall short, as they did in this instance, the consequences can be dire.

The lawsuit against the chatbot’s creators raises essential questions about responsibility and accountability in the realm of AI. Developers are quick to tout what their products can do; they must be equally attentive to the ethical implications of how those products are used.

Dangers of AI technology

In Sewell’s case, the chatbot reportedly engaged him in highly sexualised conversations which, according to the lawsuit, deepened his isolation and may have exacerbated his mental health struggles. This raises alarming concerns about how far AI can influence vulnerable individuals and whether adequate safeguards are in place to protect users, particularly minors.

Moreover, this incident underscores a broader societal issue: a lack of understanding about the limitations and potential dangers of AI technology. Many users, especially young people, may view chatbots and AI-driven applications as empathetic companions without realising what these tools cannot do. Unlike humans, AI cannot genuinely understand or respond to emotional nuance. It can simulate conversation, but it lacks the ability to provide authentic support or guidance.

As AI technologies develop rapidly, the stakes are high. We must tread carefully and prioritise ethical considerations in AI development. This means implementing robust guidelines that ensure AI systems are designed with user safety in mind, for example by verifying users’ ages and directing anyone who expresses suicidal thoughts to crisis support. Developers should be required to consider the potential impacts of their technologies, particularly on vulnerable populations.

Furthermore, we must invest in public education about AI, fostering a better understanding of its capabilities and limitations. Empowering users, especially young people, to critically evaluate their interactions with AI can help mitigate potential harm. Parents, educators, and policymakers must work together to create an environment where technology enhances well-being rather than endangers it.

Sewell Setzer III’s tragic story is a wake-up call for all of us. As we embrace the potential of AI, we must also acknowledge its risks and approach its integration into society with caution and responsibility.

Only by doing so can we ensure that technology serves as a tool for empowerment and connection rather than a catalyst for despair. The future of AI holds great promise, but we must navigate this landscape with care, vigilance, and an unwavering commitment to protecting our most vulnerable.

Ahmad Nazir is a UAE-based freelance writer with a degree in education from the Université de Montpellier in southern France.