If you listen to its boosters, artificial intelligence is poised to revolutionize nearly every facet of life for the better. A tide of new, cutting-edge tools is already demolishing language barriers, automating tedious tasks, detecting cancer and comforting the lonely.

A growing chorus of doomsayers, meanwhile, agrees AI is poised to revolutionize life - but for the worse. It is absorbing and reflecting society's worst biases, threatening the livelihoods of artists and white-collar workers, and perpetuating scams and disinformation, they say.

The latest wave of AI has the tech industry and its critics in a frenzy. So-called generative AI tools such as ChatGPT, Replika and Stable Diffusion, which use specially trained software to create humanlike text, images, voices and videos, seem to be rapidly blurring the lines between human and machine, truth and fiction.

As sectors ranging from education to health care to insurance to marketing consider how AI might reshape their businesses, a crescendo of hype has given rise to wild hopes and desperate fears. Fueling both is the sense that machines are getting too smart, too fast - and could someday slip beyond our control. "What nukes are to the physical world," tech ethicist Tristan Harris recently proclaimed, "AI is to everything else."

Euphoria and alarm

The benefits and dark sides are real, experts say. But in the short term, the promise and perils of generative AI may be more modest than the headlines make them seem.

"The combination of fascination and fear, or euphoria and alarm, is something that has greeted every new technological wave since the first all-digital computer," said Margaret O'Mara, a professor of history at the University of Washington. As with past technological shifts, she added, today's AI models could automate certain everyday tasks, obviate some types of jobs, solve some problems and exacerbate others, but "it isn't going to be the singular force that changes everything."

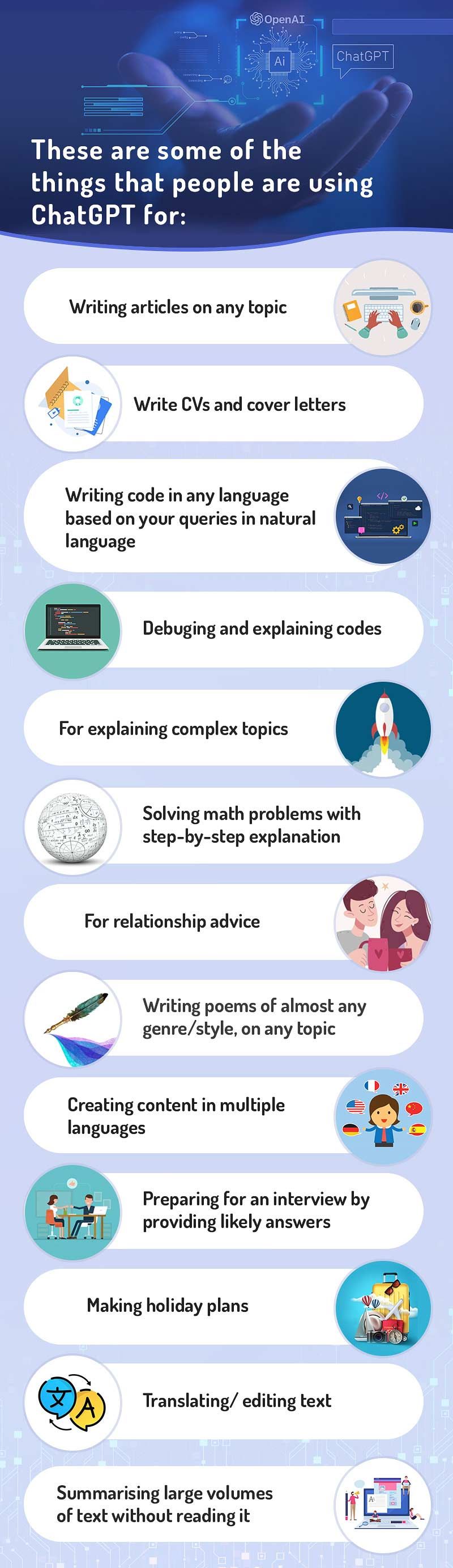

There’s an AI for that: Know the top 10 AI tools you can use now

ChatGPT is one example of the ongoing expansion of AI. Other AI tools are now available and equally worth exploring. The following are the top 10 on our list:

Midjourney

Tome.app

soundraw.io

Leiapix Converter

Fliki

Fireflies

Runway

NeevaAI

Kaiber

GPT-J

What is ChatGPT and why is everyone talking about it?

ChatGPT was released by OpenAI, a research and deployment company co-founded in 2015 by a group that included Elon Musk and Sam Altman. The firm says it seeks to deploy artificial general intelligence for the benefit of all humanity.

What's new is the fervor

Neither artificial intelligence nor chatbots are new. Various forms of AI already power TikTok's "For You" feed, Spotify's personalized music playlists, Tesla's Autopilot driving systems, pharmaceutical drug development and facial recognition systems used in criminal investigations. Simple computer chatbots have been around since the 1960s and are widely used for online customer service.

What's new is the fervor surrounding generative AI, a category of AI tools that draw on oceans of data to create their own content - art, songs, essays, even computer code - rather than simply analyzing or recommending content created by humans. While the technology behind generative AI has been brewing for years in research labs, start-ups and companies have only recently begun releasing such tools to the public.

Nightmare scenario?

Free tools such as OpenAI's ChatGPT chatbot and DALL-E 2 image generator have captured imaginations as people share novel ways of using them and marvel at the results. Their popularity has the industry's giants, including Microsoft, Google and Facebook, racing to incorporate similar tools into some of their most popular products, from search engines to word processors.

Yet for every success story, it seems, there's a nightmare scenario.

ChatGPT's facility for drafting professional-sounding, grammatically correct emails has made it a daily timesaver for many, empowering people who struggle with literacy. But Vanderbilt University used ChatGPT to write a collegewide email offering generic condolences in response to a shooting at Michigan State, enraging students.

ChatGPT and other AI language tools can also write computer code, devise games, and distill insights from data sets. But there's no guarantee that code will work, the games will make sense or the insights will be correct. Microsoft's Bing AI bot has already been shown to give false answers to search queries, and early iterations even became combative with users. A game that ChatGPT seemingly invented turned out to be a copy of a game that already existed.

GitHub Copilot, an AI coding tool from OpenAI and Microsoft, has quickly become indispensable to many software developers, predicting their next lines of code and suggesting solutions to common problems. Yet its solutions aren't always correct, and it can introduce faulty code into systems if developers aren't careful.

Thanks to biases in the data it was trained on, ChatGPT's outputs can be not just inaccurate but also offensive. In one infamous example, ChatGPT composed a short software program that suggested that an easy way to tell whether someone would make a good scientist was to simply check whether they are both White and male. OpenAI says it is constantly working to address such flawed outputs and improve its model.

At San Francisco expo, AI 'sorry' for destroying humanity

Advances in artificial intelligence are coming so hard and fast that a museum in San Francisco, the beating heart of the tech revolution, has imagined a memorial to the demise of humanity.

Mimicking the voices of real people

Stable Diffusion, a text-to-image system from the London-based start-up Stability AI, allows anyone to produce visually striking images in a wide range of artistic styles, regardless of their artistic skill. Bloggers and marketers quickly adopted it and similar tools to generate topical illustrations for articles and websites without the need to pay a photographer or buy stock art.

But some artists have argued that Stable Diffusion explicitly mimics their work without credit or compensation. Getty Images sued Stability AI in February, alleging that it violated copyright by using 12 million images to train its models, without paying for them or asking permission.

Stability AI did not respond to a request for comment.

Start-ups that use AI to speak text in humanlike voices point to creative uses like audiobooks, in which each character could be given a distinctive voice matching their personality. The actor Val Kilmer, who lost his voice to throat cancer in 2015, used an AI tool to re-create it.

Now, scammers are increasingly using similar technology to mimic the voices of real people without their consent, calling up the target's relatives and pretending to need emergency cash.

There's a temptation, in the face of an influential new technology, to take a side, focusing either on the benefits or the harms, said Arvind Narayanan, a computer science professor at Princeton University. But AI is not a monolith, and anyone who says it's either all good or all evil is oversimplifying. At this point, he said, it's not clear whether generative AI will turn out to be a transformative technology or a passing fad.

"Given how quickly generative AI is developing and how frequently we're learning about new capabilities and risks, staying grounded when talking about these systems feels like a full-time job," Narayanan said. "My main suggestion for everyday people is to be more comfortable with accepting that we simply don't know for sure how a lot of these emerging developments are going to play out."

The capacity for a technology to be used both for good and ill is not unique to generative AI. Other types of AI tools, such as those used to discover new pharmaceuticals, have their own dark sides. Last year, researchers found that such drug-discovery systems were able to brainstorm some 40,000 potentially lethal new bioweapons.

More familiar technologies, from recommendation algorithms to social media to camera drones, are similarly amenable to inspiring and disturbing applications. But generative AI is inspiring especially strong reactions, in part because it can do things - compose poems or make art - that were long thought to be uniquely human.

The lesson isn't that technology is inherently good, evil or even neutral, said O'Mara, the history professor. How it's designed, deployed and marketed to users can affect the degree to which something like an AI chatbot lends itself to harm and abuse. And the "overheated" hype over ChatGPT, with people declaring that it will transform society or lead to "robot overlords," risks clouding the judgment of both its users and its creators.

"Now we have this sort of AI arms race - this race to be the first," O'Mara said. "And that's actually where my worry is. If you have companies like Microsoft and Google falling over each other to be the company that has the AI-enabled search - if you're trying to move really fast to do that, that's when things get broken."

ChatGPT, the viral chatbot from OpenAI: Is this the one-stop solution for all your queries?

An artificial intelligence (AI) chatbot with a most uncreative name, Chat Generative Pre-trained Transformer, or ChatGPT for short, is making huge waves in the tech community across the world. Some hail it as the bold new frontier. The tool has also raised questions about academic dishonesty, plagiarism, misinformation and biases.
